Well-behaved search engine crawlers read a website's robots.txt file and its crawling rules before crawling the site. That makes robots.txt an important part of search engine optimization (SEO) for a Blogger blog. This article explains how to create a perfect custom robots.txt file in Blogger and optimize the blog for search engines.

What are the functions of the robots.txt file?

The robots.txt file tells search engine crawlers which pages they should and shouldn't crawl. It therefore lets us control how search engine bots behave on the site.

Blogger robots.txt for best SEO

In the robots.txt file, we declare the User-agent, Allow, Disallow, and Sitemap directives for search engines like Google, Bing, Yandex, etc. Let's look at what each of these terms means.

Usually, we use robots meta tags to let all search engine crawlers index blog posts and pages. But if you want to save crawl budget or keep search engine bots out of some sections of the website, you have to understand the robots.txt file of your Blogger blog.

Analyze the default Robots.txt file of the Blogger Blog

To create a custom robots.txt file for a Blogger blog, we first have to understand the structure of a Blogger blog and analyze its default robots.txt file.

By default, the file looks like this:

----------------------------------------------

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml

-------------------------------------------------

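The first line (User-agent) declares which bot the group of rules applies to. Here it is Mediapartners-Google, the Google AdSense crawler, and the empty Disallow rule on the next line blocks nothing. That means the AdSense crawler can access every page, so AdSense ads can appear throughout the website.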

The next user agent is *, which applies the rules to all search engine bots. Disallow: /search blocks every URL that starts with /search, which covers both search result pages and label pages, because Blogger label pages share the same /search/label/ URL structure.

The Allow: / rule then lets bots crawl every page outside the disallowed section.

The last line points to the sitemap of the Blogger blog's posts.

This is an almost perfect file for controlling search engine bots and telling them which pages to crawl. Note, however, that allowing a page to be crawled does not guarantee that it will be indexed.

But this file also lets bots crawl the archive pages (URLs such as /2023/05/), which repeat the content of your posts and can cause duplicate content issues. In other words, it fills the Blogger blog's crawl with low-value junk URLs.
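To see how a crawler reads the default file, you can test it locally. The sketch below uses Python's standard urllib.robotparser module, which is enough here because the default file contains only plain prefix rules (the module applies rules in file order rather than Google's longest-match precedence, but for this file both give the same answers). The address www.example.com, the Googlebot user-agent string, and the sample paths, including the /2023/05/ archive URL, are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Blogger's default robots.txt; www.example.com stands in for your blog's address.
DEFAULT_ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

BASE = "https://www.example.com"

parser = RobotFileParser()
parser.parse(DEFAULT_ROBOTS_TXT.splitlines())

# (user agent, path) pairs to test; the paths are illustrative examples.
CHECKS = [
    ("Googlebot", "/search/label/SEO"),             # label page
    ("Googlebot", "/search?q=robots"),              # search results page
    ("Googlebot", "/2023/05/"),                     # monthly archive page
    ("Googlebot", "/2023/05/my-post.html"),         # blog post
    ("Mediapartners-Google", "/search/label/SEO"),  # AdSense crawler
]

for agent, path in CHECKS:
    verdict = "allowed" if parser.can_fetch(agent, BASE + path) else "blocked"
    print(f"{agent:<22}{path:<26}{verdict}")

print("Sitemaps:", parser.site_maps())
```

The label and search pages come back as blocked for Googlebot, while the archive page comes back as allowed, which is the duplicate content gap the custom file closes.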


How to Create a Perfect Custom robots.txt File for Blogger (Blogspot)

We have seen how the default robots.txt file works for a Blogger blog. Now let's optimize it for the best SEO. The default robots.txt lets bots crawl the archive pages, which causes the duplicate content issue. We can prevent this by stopping bots from crawling the archive section. Meanwhile, the Disallow: /search* rule keeps all search and label pages out of the crawl (the trailing * is optional, because robots.txt rules already match by prefix).

Add a Disallow: /20* rule to the robots.txt file to stop bots from crawling the archive section.

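However, the /20* rule also blocks all blog posts, because post URLs start with the year as well. To avoid this, we add an Allow: /*.html rule that lets bots crawl posts and pages. Google applies the most specific matching rule, meaning the one with the longest path, so /*.html (7 characters) overrides /20* (4 characters) for post URLs.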
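Python's urllib.robotparser cannot check this rule set, because it treats * as a literal character and applies rules in file order. The sketch below instead hand-codes a simplified version of the precedence Google documents for robots.txt: * matches any run of characters, a trailing $ anchors the end of the URL, the longest matching rule path wins, and Allow wins a tie. It is not Google's own parser; the function names pattern_to_regex and is_allowed, the CUSTOM_RULES list, and the sample paths are hypothetical.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters and a trailing '$' anchors the end
    of the path; every other character is literal. Without '$' the rule
    is a prefix match, so callers use re.match (anchored at the start only).
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide whether `path` may be crawled under one user-agent group.

    `rules` holds ("allow" | "disallow", pattern) pairs. The longest
    matching pattern wins, Allow wins a tie, and a path that matches
    no rule is allowed.
    """
    best_len, best_allow = -1, True
    for kind, pattern in rules:
        if not pattern:  # an empty rule matches nothing and is ignored
            continue
        if pattern_to_regex(pattern).match(path):
            allow = kind == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and allow):
                best_len, best_allow = len(pattern), allow
    return best_allow


# The "User-agent: *" group of the custom Blogger robots.txt.
CUSTOM_RULES = [
    ("disallow", "/search*"),
    ("disallow", "/20*"),
    ("allow", "/*.html"),
]

for path in ["/search/label/SEO", "/2023/05/", "/2023/05/my-post.html", "/p/about.html"]:
    print(path, "allowed" if is_allowed(CUSTOM_RULES, path) else "blocked")
```

A post URL such as /2023/05/my-post.html matches both /20* and /*.html; because the Allow pattern is longer, the post stays crawlable while the bare archive URL /2023/05/ is blocked.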

The default sitemap includes posts but not pages, so you also have to add the sitemap for pages, located at https://example.blogspot.com/sitemap-pages.xml (or https://www.example.com/sitemap-pages.xml for a custom domain). You can also submit your Blogger sitemaps to Google Search Console to help Google discover your content.

So the new perfect custom robots.txt file for the Blogger blog will look like this.

-------------------------------------------

User-agent: Mediapartners-Google
Disallow:

# The lines below apply to all search engines: they block search, label and archive pages and allow all blog posts and pages.
User-agent: *
Disallow: /search*
Disallow: /20*
Allow: /*.html

# Sitemaps of the blog
Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/sitemap-pages.xml

--------------------------------------------------
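Because both Sitemap lines must point at your own blog address, a small helper can fill it in for you. This is a sketch: the function name build_blogger_robots_txt is hypothetical, and it simply reproduces the file above with your blog URL in place of www.example.com. You would paste its printed output into Blogger's custom robots.txt box.

```python
def build_blogger_robots_txt(blog_url: str) -> str:
    """Return the custom Blogger robots.txt with sitemaps for `blog_url`.

    `blog_url` is the blog's address, e.g. "https://example.blogspot.com"
    or "https://www.example.com" for a custom domain.
    """
    base = blog_url.rstrip("/")  # avoid a double slash before sitemap.xml
    lines = [
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "# Block search, label and archive pages; allow all posts and pages.",
        "User-agent: *",
        "Disallow: /search*",
        "Disallow: /20*",
        "Allow: /*.html",
        "",
        "# Sitemaps for posts and static pages.",
        f"Sitemap: {base}/sitemap.xml",
        f"Sitemap: {base}/sitemap-pages.xml",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_blogger_robots_txt("https://example.blogspot.com"))
```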

The file above follows robots.txt best practice for SEO. It saves the website's crawl budget and can help the Blogger blog's posts appear in search results. You still have to write SEO-friendly content to rank in those results.

If you would rather allow bots to crawl the complete website, use the advanced robots meta tags together with the robots.txt file instead. That combination is one of the best practices to boost the SEO of a Blogger blog.

How to edit the robots.txt file of the Blogger blog?

The robots.txt file is located at the root level of a website. Blogger gives no access to the root, so how do you edit this robots.txt file?

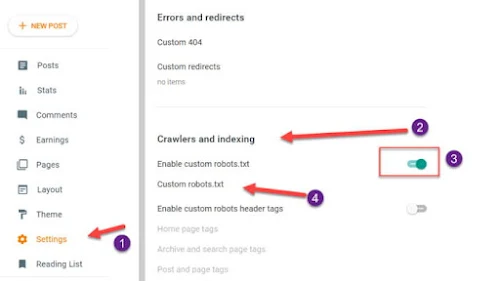

Go to the Blogger dashboard and click Settings.

Scroll down to the Crawlers and indexing section.

Turn on Enable custom robots.txt with the switch button.

Click Custom robots.txt. In the window that opens, paste the robots.txt file and save the changes.

In the default robots.txt file, the archive section is also open to crawling, which creates duplicate content for search engines. Search engines may then be unsure which URL to show in the search results and may rank your pages lower or skip them.

That is why robots rules are essential for the SEO of a website. For the best results, consider combining robots.txt with robots meta tags in your Blogger blog. You can also download responsive, SEO-friendly templates for the Blogger blog.

Reblog / Link : https://seoneurons.com/blogger/perfect-robots-txt-file/